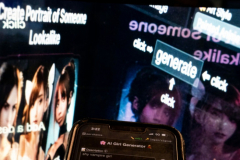

WASHINGTON – When Ellis, a 14-year-old from Texas, woke up one October morning with several missed calls and texts, they were all about the same thing: nude images of her circulating on social media.

That she had not actually taken the photos made no difference, as artificial intelligence (AI) makes so-called “deepfakes” more and more realistic.

The images of Ellis and a friend, also a victim, were lifted from Instagram, their faces then placed on the naked bodies of other people. Other students, all girls, were also targeted, with the composite photos shared with other classmates on Snapchat.

“It looked real, like the bodies looked like real bodies,” she told AFP. “And I remember being really, really scared… I’ve never done anything of that sort.”

As AI has boomed, so has deepfake pornography, with hyperrealistic images and videos created with little effort and money, leading to scandals and harassment at several high schools in the United States as administrators struggle to respond amid a lack of federal legislation banning the practice.

“The girls just cried, and cried forever. They were very ashamed,” said Anna Berry McAdams, Ellis’ mother, who was shocked at how realistic the images looked. “They didn’t want to go to school.”

– ‘A smartphone and a few dollars’ –

Though it is hard to quantify how widespread deepfakes are becoming, Ellis’ school outside of Dallas is not alone.

At the end of the month, another fake nudes scandal erupted at a high school in the northeastern state of New Jersey.

“It will happen more and more often,” said Dorota Mani, the mother of one of the victims there, also 14.

She added that there is no way to know if pornographic deepfakes might be floating around on the internet without one’s knowledge, and that investigations often only arise when victims speak out.

“So many
