Demis Hassabis, CEO of Google DeepMind, just told the AI world that the path ChatGPT is on needs a world model. OpenAI, Google, xAI and Anthropic are all using the large language model (LLM) approach. Google’s Genie 3 system, released last August, generates interactive 3D environments from text.

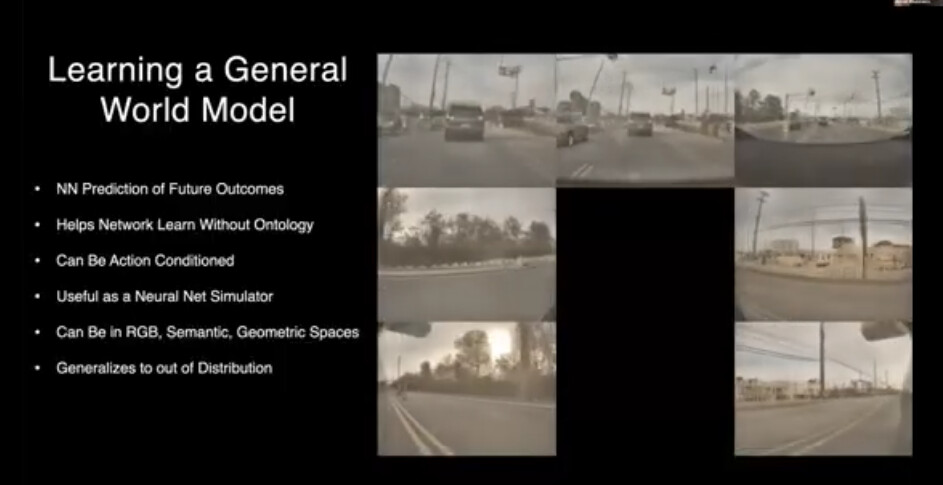

Here is my review of the state of the art in world models. There is also a need for continual learning and integrated memory. For two years, Tesla’s FSD has projected future frames, split second by split second, for its eight driving cameras. Tesla does this both for simulation and to anticipate what might happen while driving.

Google DeepMind and Tesla/xAI have advanced world models and are working on integration.

Tesla and xAI have also advanced world models for the Optimus humanoid robot. Tesla has a massive patent portfolio in AI for autonomous driving (hundreds filed or granted, many in 2025, focused on neural networks, data pipelines, vision processing, and simulation). Some touch on generative/synthetic data or scene understanding that could relate to world models (patents on neural network adaptation, visual data processing, or simulation).

Optimus (Tesla’s humanoid robot) uses similar principles and leverages vision-based end-to-end models for manipulation, navigation, and task planning, potentially extending FSD-like world models to embodied robotics. These models must predict physics in human-like environments and need split-second reaction times.

Humanoid robot competitors such as 1X (with its “world model” for the Neo robot, which reduces reliance on teleoperation) discuss world models openly, but Tesla does not publish papers or share code.

Nvidia has also done significant work with its world simulators. Nvidia works with most of the other humanoid robot companies and provides them with simulators and tools.

Fei-Fei Li and World Labs have strong theoretical work.

CHAPTERS:

05:26 Demis Hassabis on AI’s Current Capabilities and Future

09:56 Demis Explains How AI Learns Physical Reality

12:09 Demis Predicts AGI in 5-10 Years, Addresses Energy

15:25 Societal Transformation, Disruption, and Personal Unwind

22:06 Hosts Debate AI’s Future: Language, Physics and Robots

26:28 Google’s Comeback in the Intense AI Race

28:28 Demis on Market Exuberance and Google’s Financial Strength

32:19 Geopolitics: China’s Rapid Catch-Up in AI

34:27 Hosts Analyze China’s AI and Google’s Financial Edge

38:08 How DeepMind Powers Google’s AI Products and Edge Devices

41:17 Demis Reflects on Google’s Vision and NVIDIA Partnership

45:05 Demis’s Vision for AI’s Golden Age of Discovery

47:13 Hosts Discuss Google AI’s Advantage and OpenAI Pressure

AI agents inside simulated worlds can outperform regular agents by about 20-30% on reasoning tasks.

This review covers Hassabis’s views on world models, their necessity for AGI, his definition and vision of AGI, and related concepts (limitations of current LLMs, needed breakthroughs, timelines).

Tesla’s FSD (especially from v12 onward) relies heavily on end-to-end neural networks and on what are often called world models, both inside and outside Tesla. These are embodied AI systems that simulate and predict real-world physics, scenes, trajectories, and actions from vision data for driving planning and control.

Tesla has discussed world models in contexts such as occupancy networks, synthetic data generation, and simulation for training (Ashok Elluswamy’s keynotes at CVPR 2023 and ICCV 2025 workshops, where Tesla explored world models for autonomous driving and potential robotics extensions).

Demis Hassabis on AI’s Current Capabilities and Future

Hassabis said scaling laws are STILL effective: more compute, more data, and larger models yield meaningful capability gains. The gains are slower than in the peak years but still far from zero.
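Shrinking-but-nonzero returns are what a power law predicts. As a purely illustrative sketch, assume loss follows L(C) = L_INF + A·C^(−ALPHA) in training compute C. The constants A, ALPHA and L_INF below are hypothetical and not fitted to Gemini or any real model. Under that assumption, each 10x of compute cuts loss by a factor 10^(−ALPHA) less than the previous 10x did, so gains shrink geometrically but never reach zero.

```python
from typing import List

# Hypothetical constants chosen for illustration only; not fitted to any real model.
A = 10.0       # scale of the reducible loss
ALPHA = 0.05   # power-law exponent
L_INF = 1.7    # irreducible loss floor


def loss(compute: float) -> float:
    """Hypothetical loss as a power law in training compute."""
    return L_INF + A * compute ** (-ALPHA)


def gains_per_10x(start: float, steps: int) -> List[float]:
    """Loss reduction bought by each successive 10x increase in compute."""
    gains = []
    c = start
    for _ in range(steps):
        gains.append(loss(c) - loss(c * 10))
        c *= 10
    return gains


if __name__ == "__main__":
    for i, g in enumerate(gains_per_10x(1e18, 5)):
        print(f"10x step {i + 1}: loss drops by {g:.4f}")
```

With ALPHA = 0.05, each successive 10x buys about 11% less improvement than the one before (1 − 10^(−0.05) ≈ 0.109), which matches the "slower, but not zero" shape Hassabis describes.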

Current AI systems show jagged intelligence: they excel in some areas (language, certain kinds of reasoning) but fail inconsistently in others (failing simple tasks when they are phrased differently).

Missing capabilities for true generality: continual/online learning, true originality and innovation, consistent performance, long-term planning, and better reasoning.

To reach AGI, scaling alone may not suffice. One or two major innovations (like AlphaGo-level breakthroughs) are likely still needed beyond current architectures.

Demis Explains How AI Learns Physical Reality

World models are a core passion for Hassabis and likely a missing piece. AI must build internal simulations of the world’s physics, causality (how one thing affects another), and higher-level domains (biology, economics) to understand reality deeply.

LLMs and foundation models (such as Gemini) handle multimodal data (text, images, video, audio) but lack true understanding of physics, causality, long-term planning, or hypothesis testing via mental simulation.

Humans (especially scientists) use intuitive physics and mental simulations to test ideas; current AI cannot generate novel hypotheses or new scientific conjectures independently.
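Testing ideas by mental simulation can be sketched as model-based planning: before acting, the agent asks its world model "what happens if I do this?" for many candidate action sequences and acts on the best one. This is a minimal sketch under stated assumptions. `ToyWorldModel` is a hypothetical hand-written 1D physics model standing in for a learned one, and the exhaustive search over short action sequences is a simple form of model predictive control. It is not DeepMind’s or Tesla’s method.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class State:
    position: float
    velocity: float


class ToyWorldModel:
    """Hypothetical hand-written 1D physics standing in for a learned world model."""

    def __init__(self, dt: float = 0.1, friction: float = 0.1) -> None:
        self.dt = dt
        self.friction = friction

    def step(self, s: State, accel: float) -> State:
        """Predict the next state from the current state and an acceleration command."""
        v = s.velocity + (accel - self.friction * s.velocity) * self.dt
        return State(s.position + v * self.dt, v)

    def rollout(self, s: State, actions: Sequence[float]) -> State:
        """Imagine a whole action sequence without touching the real world."""
        for a in actions:
            s = self.step(s, a)
        return s


def plan(model: ToyWorldModel, s: State, target: float, horizon: int = 4,
         choices: Tuple[float, ...] = (-1.0, 0.0, 1.0)) -> Tuple[float, ...]:
    """Try every candidate action sequence in simulation and keep the best one."""
    best: Optional[Tuple[float, ...]] = None
    best_cost = float("inf")
    for seq in product(choices, repeat=horizon):
        end = model.rollout(s, seq)
        # Prefer ending near the target, with a small penalty for leftover speed.
        cost = abs(end.position - target) + 0.05 * abs(end.velocity)
        if cost < best_cost:
            best, best_cost = seq, cost
    assert best is not None
    return best


if __name__ == "__main__":
    model = ToyWorldModel()
    state = State(position=0.0, velocity=0.0)
    for t in range(30):
        action = plan(model, state, target=1.0)[0]  # execute only the first planned action
        state = model.step(state, action)           # here the model also plays the "real" world
        print(t, action, round(state.position, 3))
```

Replanning at every step and executing only the first action lets the agent correct for surprises. In a real system the world model would be a learned network, and the search would typically sample candidates rather than enumerate them all.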

DeepMind’s work includes early, embryonic world models such as Genie (interactive world generation) and video models (Veo), which imply understanding: if a model can generate realistic video, it must grasp some of the world’s dynamics.

Vision: future AGI will combine foundation models (like Gemini) with world-model capabilities into integrated, powerful systems. World models will enhance LLMs rather than fully supersede them.

Demis Predicts AGI in 5-10 Years, Addresses Energy

AGI definition and vision: a system exhibiting all human cognitive capabilities, including true innovation and creativity, planning, reasoning, consistent general performance across domains, continual learning, and the ability to understand and explain the world (new scientific theories via simulation and hypothesis testing). It goes beyond passive prediction to active understanding, invention, and autonomous action.

AGI is still 5–10 years away, consistent with DeepMind’s 2010 founding vision of a roughly 20-year mission. Progress has accelerated dramatically.

The bottlenecks are compute and chip shortages and energy constraints (intelligence is increasingly tied to energy availability).

AI itself can help relieve these constraints (efficiency gains, better materials and solar, fusion plasma control via collaborations such as the one with Commonwealth Fusion Systems, and the search for room-temperature superconductors).

Efficiency is also improving. Models like Gemini Flash use distillation (big models teach smaller ones), delivering 10x better performance per watt each year.
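Distillation, in its classic form, trains a small "student" to match the temperature-softened output probabilities of a large "teacher". The sketch below is a hedged illustration of that standard soft-target loss, not how Gemini Flash is actually trained. For simplicity it directly optimizes the student’s logits for a single input instead of training a student network’s weights, and the teacher logits are made-up numbers.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


def distill_step(teacher_logits: List[float], student_logits: List[float],
                 temperature: float = 2.0, lr: float = 0.5) -> List[float]:
    """One gradient-descent step moving the student's logits toward the teacher's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    # Gradient of T^2 * KL(p || q) with respect to student logit z_j is T * (q_j - p_j).
    return [z - lr * temperature * (qi - pi)
            for z, pi, qi in zip(student_logits, p, q)]


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical logits from a large "teacher" model
    student = [0.0, 0.0, 0.0]  # an untrained small "student"
    print("loss before:", distillation_loss(teacher, student))
    for _ in range(200):
        student = distill_step(teacher, student)
    print("loss after:", distillation_loss(teacher, student))
```

The softened targets carry more information than a single correct label (how much the teacher prefers each wrong answer), which is one reason a smaller, cheaper student can recover much of a big model’s behavior.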

Societal Transformation, Disruption, and Personal Unwind

The impact of AGI will be as transformative as the Industrial Revolution, but 10x bigger and 10x faster, with massive benefits (curing diseases via AlphaFold spinouts such as Isomorphic Labs, energy breakthroughs, and solving climate, poverty, water, health, and aging).

The risks of AGI are economic disruption (requiring new economic models), bad actors misusing AI, and loss of control over autonomous, agentic systems (guardrails are essential).

Hassabis is cautiously optimistic. He believes in human ingenuity and a focus on safety (DeepMind has planned for powerful systems since 2010 and uses the scientific method to understand and deploy them responsibly). He does not advocate a slowdown, given geopolitical and corporate race dynamics; instead, he wants to push the frontier responsibly and act as a role model.

Hosts Debate AI’s Future: Language, Physics and Robots

LLMs are strong on language but weak at understanding the physical world (causality, what robotics needs).

World models are rising in importance for robotics and autonomous driving. Convergence with LLMs could enable true generality.

Criticism of LLMs: they are limited in novelty and original ideas (echoing Yann LeCun’s views). World models address this by enabling simulation-based hypothesis testing.

The robotics challenge and training agents: world models are key to autonomous operation, so that robots become more than teleoperated puppets.

Google’s Comeback in the Intense AI Race

Google has caught up with, and in some areas surpassed, its rivals (the Gemini series on leaderboards).

Google’s reorganization integrated its research (Google Brain and DeepMind merged under Hassabis), bringing scrappier commercialization and a tight loop with Sundar Pichai for roadmap alignment.

Demis on Market Exuberance and Google’s Financial Strength

Whether AI is a bubble is not a binary question. There is some overvaluation, and some seed rounds have little substance. However, the fundamentals are strong: AI is a transformative technology like the internet and electricity.

Google has a strong balance s